I do hackathons because they are the most effective, streamlined learning process I know. They may involve unhealthy amounts of Red Bull and coffee, and a few sleepless nights, but you get so, so much out of them. This one in particular was about running HPC stuff on AWS cloud instances powered by Arm Neoverse N1 cores. I was coming into it after running some ML deep-network stuff on Greene [NYU’s HPC], so I thought, why not learn some Slurm-fu? I was a bit hesitant, because I thought this hackathon was not for me: the email kinda hinted that it was for people who already had a program they wanted to optimize or already knew some stuff, but I was a n00b with no program in mind. I am SO glad I dared to take part!

It ended up being nothing like what I expected, and not much Slurm-fu either. The best way I can describe it is: you’ve seen people speedrun games, right? Now imagine that, but instead of Minecraft, it is compiling HPC software [seblov waves, ice sheets, Monte Carlo particle transport, all that good stuff] using Spack, then benchmarking and validating it using ReFrame. It was a great opportunity, as there were mentors from NVIDIA, Arm, AWS, Spack and ReFrame helping people build apps, benchmark them, do scaling studies, optimization, etc. And the mentors were amazing: really awesome lads who were also extremely patient with my n00b questions. E.g. THE Todd Gamblin, the creator of Spack, once calmly corrected me for using @ instead of % to specify the compiler. That is a level of patience the Dalai Lama aspires to 🙂 (and don’t judge me! I also asked good questions).
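To see why @ versus % matters, here is a toy parser for a tiny, hypothetical subset of Spack’s spec syntax: `name@version %compiler@version`. It is only an illustration I made up, not Spack’s own parser (the real one also handles variants, dependencies and much more). The point it shows: in this toy, `hdf5@gcc` parses without complaint, just as a request for a *version* named gcc, with no compiler set at all.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Toy grammar (an assumption; a small subset, NOT Spack's real parser):
#   name[@version] [%compiler[@compiler_version]]
_SPEC_RE = re.compile(
    r"^\s*(?P<name>[A-Za-z0-9_-]+)"
    r"(?:@(?P<version>[\w.]+))?"
    r"\s*(?:%(?P<compiler>[A-Za-z0-9_-]+)(?:@(?P<compiler_version>[\w.]+))?)?\s*$"
)


@dataclass
class ToySpec:
    name: str
    version: Optional[str] = None           # introduced by '@'
    compiler: Optional[str] = None          # introduced by '%'
    compiler_version: Optional[str] = None  # '@' right after the compiler name


def parse_toy_spec(text: str) -> ToySpec:
    """Split a spec string into package name, version and compiler."""
    m = _SPEC_RE.match(text)
    if m is None:
        raise ValueError(f"cannot parse spec: {text!r}")
    return ToySpec(**m.groupdict())


right = parse_toy_spec("hdf5@1.12.0 %gcc@10.3.0")
wrong = parse_toy_spec("hdf5@gcc")  # the n00b mistake: '@' where '%' was meant
```

Here `right.compiler` is `"gcc"`, while `wrong.version` is `"gcc"` and `wrong.compiler` is `None`. In real Spack you would just type something like `spack spec hdf5@1.12.0 %gcc@10.3.0` and let Spack do the parsing.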

Spack (not to be confused with Spock from Star Trek) is a package manager for HPC, and it is just magic: I think the ideas behind it, and how intuitive it feels, are marvelous. Every package has a package.py that you can pry into to see how that thing is getting built, or tweak it if you like, and it is really neat. Some packages are Make-based, some are CMake-based, and we have all had our fair share of horror stories with these. Spack provides base classes like MakefilePackage and CMakePackage, so you don’t have to fiddle with those build systems in all their ugliness; you can conveniently set up flags and whatnot in Python. Spack also has this idea of virtual dependencies: you can depend on mpi, but mpi is just an interface, so Spack concretizes a virtual dependency like mpi to a specific package that implements it, like openmpi. I could go on and on about the niceness of Spack, but I’ll just leave it at this: Spack is all you ever wanted from a package manager, and all you didn’t know you wanted but very surely do! It’s not just the big things but also the small things: ‘spack cd’ was among my favorites. Everyone KNOWS you often want to cd into a package’s directory when you are looking for it, but nope, no ‘conda cd’!
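Virtual dependencies are easiest to see with a toy model. The sketch below is a hypothetical, drastically simplified “concretizer”: the `PROVIDERS` table and the `concretize` function are my own illustration, not Spack’s internals (real Spack learns providers from `provides("mpi")` directives in each package.py and resolves the whole dependency graph with a solver). A package asks for `mpi`; the toy resolves it to a preferred provider, or to the first listed one by default.

```python
from typing import Dict, List, Optional

# Hypothetical provider table (an assumption for illustration): which concrete
# packages implement a virtual interface.
PROVIDERS: Dict[str, List[str]] = {
    "mpi": ["openmpi", "mpich"],
}


def concretize(deps: List[str],
               preferences: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Map each dependency to a concrete package (toy version, not Spack's solver).

    Non-virtual dependencies map to themselves; a virtual one maps to the
    preferred provider if given, otherwise to the first listed provider.
    """
    preferences = preferences or {}
    resolved: Dict[str, str] = {}
    for dep in deps:
        if dep not in PROVIDERS:          # an ordinary package, e.g. zlib
            resolved[dep] = dep
            continue
        choice = preferences.get(dep, PROVIDERS[dep][0])
        if choice not in PROVIDERS[dep]:  # e.g. asking zlib to be your MPI
            raise ValueError(f"{choice!r} does not provide {dep!r}")
        resolved[dep] = choice
    return resolved
```

For example, `concretize(["mpi", "zlib"])` gives `{"mpi": "openmpi", "zlib": "zlib"}`, and passing `{"mpi": "mpich"}` as preferences swaps the provider. In actual Spack the same choice is spelled on the command line, e.g. `spack spec hdf5+mpi ^mpich`.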

ReFrame makes regression tests easy: want to do a scaling study? A compiler comparison? ReFrame is your friend. Blitzformation: you decorate a class with @rfm.simple_test [or fancier ones], and that class is your test. Then you define methods inside the class and decorate them to run before or after the run, sanity-check, etc. phases.
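To show the shape of that pattern without needing ReFrame installed, here is a hypothetical mini-framework that only mimics it: a class decorator registers the class as a test, and method decorators attach hooks before or after pipeline phases. The names `simple_test`, `run_before`, `run_after`, `ToyTest`, `ToyScalingTest` and the four-phase pipeline are assumptions for illustration. Real ReFrame tests use `import reframe as rfm`, `@rfm.simple_test`, and hooks like `@run_before('run')`, with a richer pipeline.

```python
from typing import Callable, Iterator, List

# Hypothetical mini-framework mimicking the *shape* of ReFrame's decorators.
# This is NOT ReFrame's API.

REGISTRY: List[type] = []                       # every decorated class lands here
PHASES = ("setup", "compile", "run", "sanity")  # simplified pipeline (assumption)


def simple_test(cls: type) -> type:
    """Class decorator: registering the class is what turns it into a test."""
    REGISTRY.append(cls)
    return cls


def _hook(when: str, phase: str) -> Callable[[Callable], Callable]:
    """Tag a method so the pipeline calls it `when` ("before"/"after") `phase`."""
    def deco(fn: Callable) -> Callable:
        fn._hook = (when, phase)
        return fn
    return deco


def run_before(phase: str) -> Callable[[Callable], Callable]:
    return _hook("before", phase)


def run_after(phase: str) -> Callable[[Callable], Callable]:
    return _hook("after", phase)


class ToyTest:
    def __init__(self) -> None:
        self.log: List[str] = []

    def _hooks(self, when: str, phase: str) -> Iterator[Callable[[], None]]:
        # Scan the class (and its bases) for methods tagged with this hook.
        for name in dir(type(self)):
            attr = getattr(type(self), name)
            if getattr(attr, "_hook", None) == (when, phase):
                yield getattr(self, name)

    def execute(self) -> List[str]:
        # Walk the pipeline: "before" hooks, the phase itself, then "after" hooks.
        for phase in PHASES:
            for hook in self._hooks("before", phase):
                hook()
            self.log.append(phase)
            for hook in self._hooks("after", phase):
                hook()
        return self.log


@simple_test
class ToyScalingTest(ToyTest):
    @run_before("run")
    def set_threads(self) -> None:
        self.log.append("set OMP_NUM_THREADS")

    @run_after("run")
    def parse_timings(self) -> None:
        self.log.append("parse timings")
```

Calling `ToyScalingTest().execute()` returns `['setup', 'compile', 'set OMP_NUM_THREADS', 'run', 'parse timings', 'sanity']`: the hooks fire around the phase they were attached to, which is the whole trick.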

And as hackathons go, this one too was a competition. Of course people were there to learn, make some pull requests to benefit mankind and all that, but you also want to give it your best shot. I, of course, wasn’t there to compete: there were teams of 4, and I was a solitary n00b with probably the least experience of anyone in these things.

I even balked at the idea of AWS last semester in my AI course, where we had the option to get some credits to run our project code there. I had a phobia of running over budget and being bankrupted by an AWS bill, and besides, isn’t good old-school on-prem more fun? The hackathon swung me hard the other way, and now I no longer wonder where the AWS hype comes from: it was fast, it was reliable, it was easy, and it just worked!

Since I discovered that I can connect VS Code to remote machines, working remotely feels much like home [I am an emacs guy no more; that life is behind me]. So anyway, I was just competing on my own, trying to show that even a one-man army can score a few points. I had multiple VS Code windows open at various directories, each with multiple terminals for different things (e.g. one for watching htop/squeue, one for spack spec, one for debugging, etc.), and I tweaked VS Code’s settings.json so I could set breakpoints inside ReFrame to see where I was and how it works. I gave it my all, and as I tore through my Nth Red Bull of the hackathon and pushed my last pull request, I was happy that I had participated.

As a closing meta-note, I think it is clear that the future is RISC. I’d go even further: it’s not going to be Arm trumping x86, but RISC blasting onto the scene and kicking some butts big time. Communities are important in this industry. The worst SIMD beats the best SIMD if it is marketed so that people think it is better; you can’t just make something cool and expect people to go to any lengths to use it. I did a project for a CV course where I implemented 2D convolution multiple ways {bland Python, Java, C++, Arm NEON, x86 AVX, oneMKL, CUDA} [I even included Verilog on a Xilinx FPGA], and I ended up discovering that oneAPI was not that good at all. Yet, the way it was marketed, I started out thinking oneAPI was THE BEST THING SINCE SLICED BREAD. Humans, after all, are the same species that gets psyched about shiny, flamboyant candy wrappers, so you gotta make nice docs, and I think this is where Arm is still behind: pitching their superior candy. Just compare the oneAPI docs to what you find after googling ArmPL! And Arm SVE is just undeniably a better way to do SIMD.

The contest repo, which also includes the recorded talks: https://github.com/arm-hpc-user-group/Cloud-HPC-Hackathon-2021

And an overview of the competition by Arm: https://community.arm.com/developer/tools-software/hpc/b/hpc-blog/posts/aws-arm-ahug-hpc-cloud-hackathon

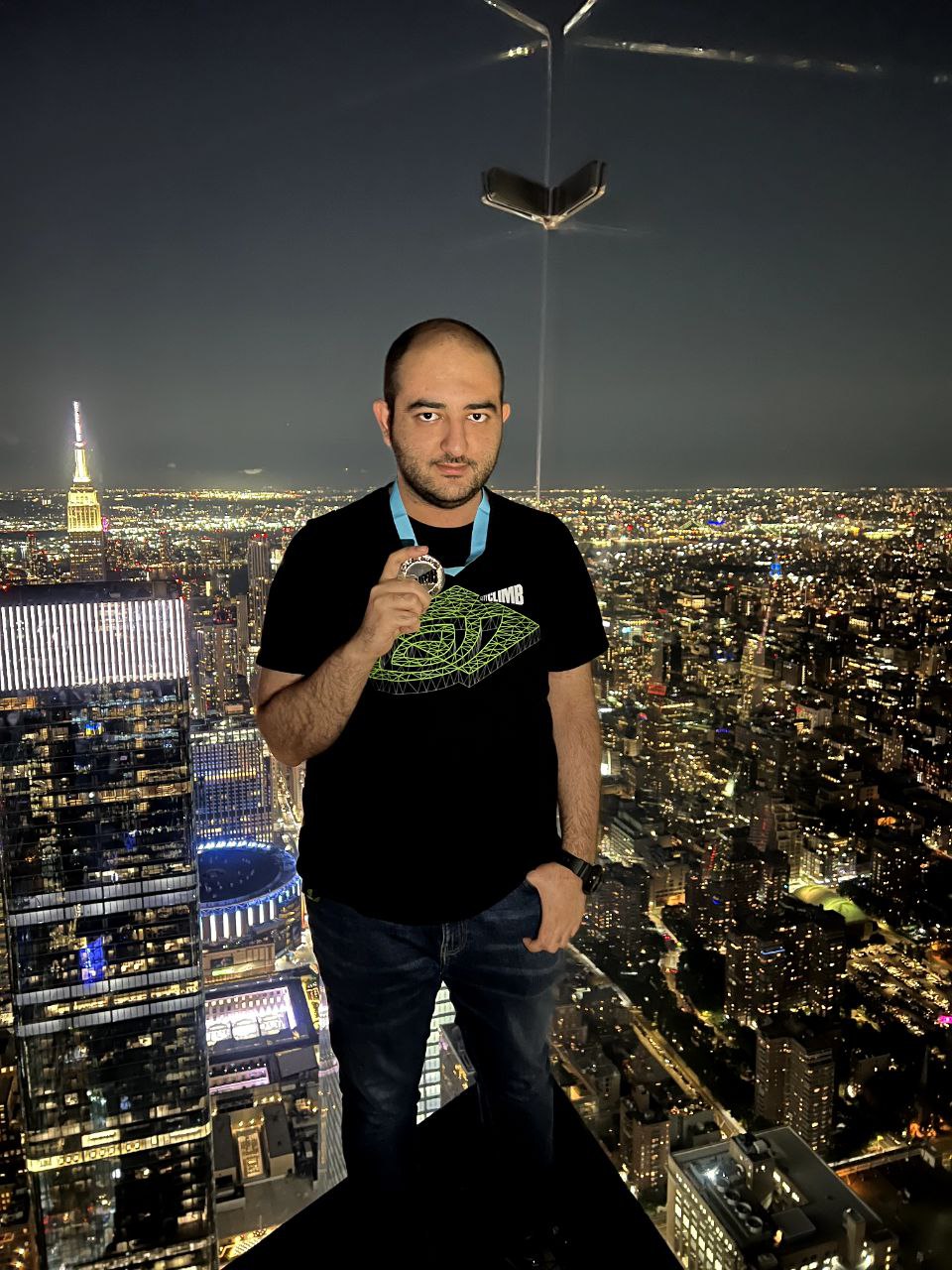

& yes I finished 3rd and won an iPad Pro.